Emerging industries in a competitive environment move so quickly and compete so vigorously that they end up sharing a common challenge: everyone is so excited to share their progress and communicate its value that they sometimes forget to stop and define key terms. This might seem like a trivial challenge, but it’s not. Poorly defined terminology creates confusion, slows adoption, and ultimately makes it harder for everyone in the market to understand the space.

In the case of the quantum computing industry, this challenge is clearly demonstrated by the terms “logical qubits” and “fault tolerance”. Both of these terms are critical to the long-term future of the industry; everyone wants them, some people might have them, and no one can agree on what exactly counts as “demonstrating” useful logical qubits or fault-tolerant quantum computing.

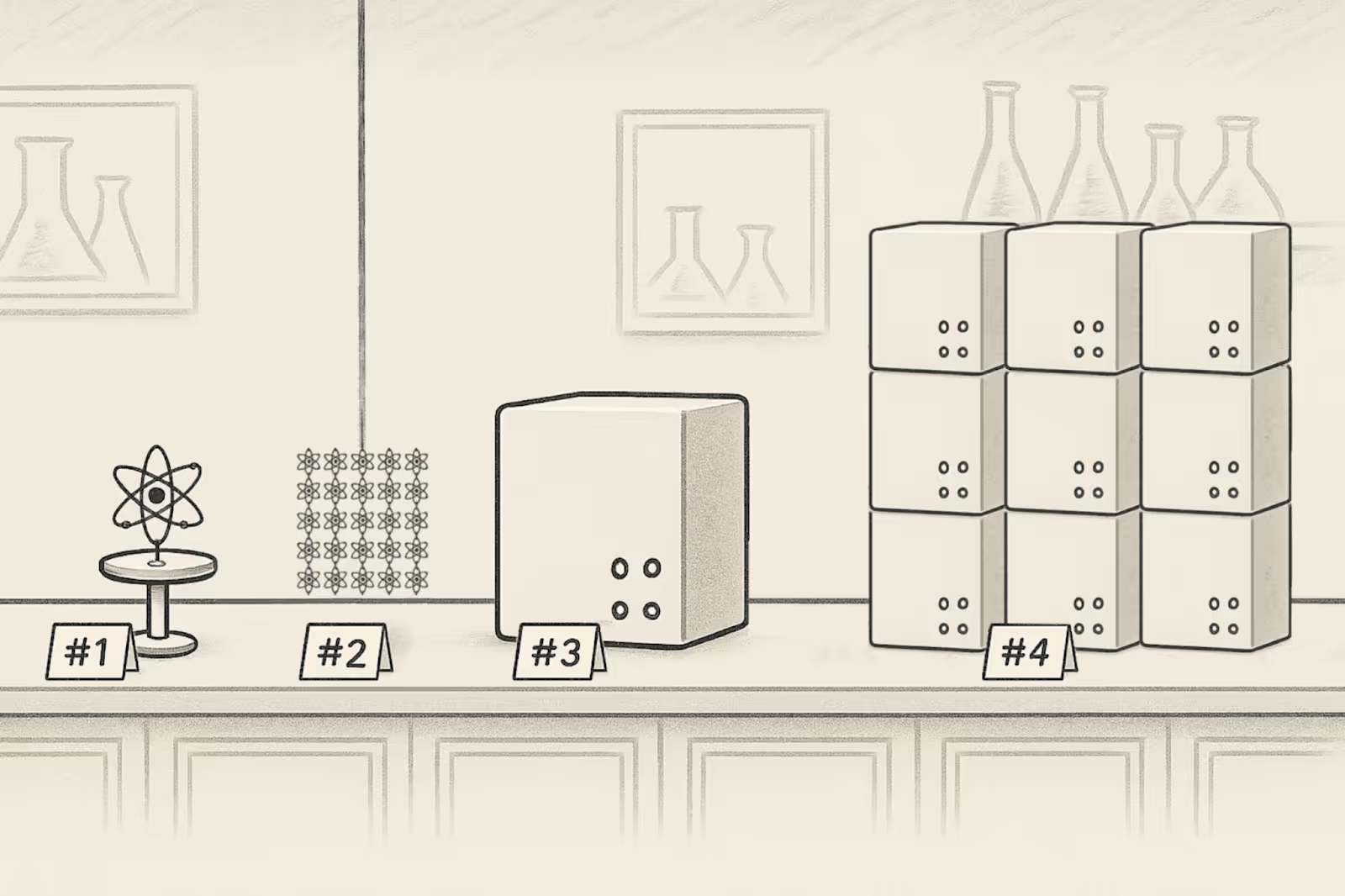

This article is an attempt to improve this situation. We first review the historical development of quantum error correction and fault-tolerant quantum computing. Then, we provide an accessible description of the key features of logical qubits, and we discuss the fault-tolerant requirements for different quantum applications. Finally, we discuss IonQ’s strategy to scale quantum computers to the regime of fault-tolerant applications. This strategy relies on two core principles: building physical qubits with the highest possible fidelity and designing a modular architecture.

The main takeaways from this article are:

- It’s important to push toward fault-tolerant quantum computing, and logical qubits play a key role in it.

- Although the industry has been making great progress, useful logical qubits are really challenging to build.

- Logical qubits differ from each other, and these differences are very meaningful.

- Although logical qubits are supposed to “upgrade” physical qubits, current implementations of logical qubits can be inferior in multiple aspects and can lead to major disadvantages.

- The quality and properties of logical qubits depend on the quality and properties of the underlying physical qubits.

- Rushing to build logical qubits with inferior physical qubits has severe drawbacks.

- Due to its high-quality physical qubits, IonQ has a strong path to a successful implementation of logical qubits.

- Over the next two years or so, using high-quality physical qubits with partial error mitigation/correction will serve as an excellent transition to fault tolerance with a sufficient number of useful logical qubits.

- Like with everything in quantum, the best architecture makes the right tradeoffs so it can produce the best results!

Introduction

The concept of “logical qubit” was born from Peter Shor’s 1995 article introducing the first quantum error correction code. The basic idea is to group multiple physical qubits together and cleverly use them in concert as a robust virtual qubit that is resilient to error in both the retention of information (memory) and the execution of logical operations (gates). In simple terms, logical qubits became widely understood as the reliable “software-defined” qubits built from relatively noisy “hardware” or “physical” qubits.

The related term “fault tolerance” was introduced in the quantum computing literature in 1996, again by Peter Shor in his seminal paper “Fault-Tolerant Quantum Computation”, which discusses computation on logical qubits. In this paper, the term “fault tolerance” is used informally and is left undefined, but the idea is clear: quantum error correction and operations on logical qubits must work even when implemented with noisy components. Precise definitions of fault tolerance appeared later, in particular to prove the so-called ‘threshold theorem’, a major theoretical breakthrough (see also the so-called AGP proof and Gottesman’s excellent review). Today, different mathematical notions of fault tolerance are used to prove theoretical results, and the exact notion of fault tolerance depends on what the authors want to demonstrate.

Since 1996, quantum computing has progressed immensely, and functioning quantum computers can be found around the world, using a diverse range of technical approaches, known as qubit modalities, each with its own unique set of advantages and weaknesses. The entire industry recognizes the concept of fault tolerance as its proverbial north star, but the path there, the expectations along the way, and the meaning of related terms have become very confusing even inside the quantum industry, to say nothing of those wishing to engage with it (customers, investors, analysts, etc.).

This challenge became more visible recently as multiple companies started directing their attention, and the attention of industry watchers, toward early logical qubit research. While this has produced a variety of technically and scientifically impressive outcomes, these frequent announcements and publications rarely include the full context necessary for the layperson to genuinely understand the contribution of each accomplishment along the larger path of developing and delivering logical qubits.

The unfortunate consequence of this tremendous progress is more confusion, more hype, and a general feeling that “it’s all about logical qubits now,” because if logical qubits are about to take us into a world of error-free quantum computation, why do physical qubits still matter at all? As you will read in this article, this could not be further from reality, and we hope that the explanations below will help inform those who wish to learn more.

In the absence of error-free quantum computation, the industry is currently in a phase that is commonly referred to as “Noisy Intermediate-Scale Quantum” or NISQ (coined in 2018 by John Preskill). In this phase, the number of qubits available for computation is relatively low, and noise is gradually being engineered out, but still expected and ever-present.

Logical qubits hold the promise of ushering us all from NISQ into the world of Fault Tolerant Quantum Computation (FTQC), but this transition may not be the sudden change that some are hoping for. Because “fault tolerance” has no formal definition in terms of a required noise level (beyond the logical error rate being lower than that of the physical qubits), we may first observe a few systems featuring thousands of physical qubits, trading immense cost and other performance disadvantages for a few hundred logical qubits with an error rate barely better than good physical qubits (and maybe even worse than the very best physical qubits out there).

At present, high-quality physical qubits are already a great solution for generating early commercial value through “NISQ” algorithms. Investment in improving physical error rates is still critical, as the quality of physical qubits directly impacts the logical qubits you make with them; many error correction schemes even have a maximum physical error rate (a “threshold”), above which they do not work at all. Furthermore, improvement in the quality and functionality of physical qubits is multiplicative when implementing logical qubits from them: a 2x reduction of the error rate of the physical qubits can result in a 4x reduction of the logical error rate using a code capable of correcting 1 error, an 8x reduction using a code correcting 2 errors, and so on. At scale, this can add up to many orders of magnitude more performant logical qubits, and that’s exactly what IonQ is working toward with the highest quality physical qubits in the industry as its foundation.
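The scaling described above can be illustrated with a rough sketch. It assumes the common heuristic that, below threshold, a code able to correct `t` errors has a logical error rate proportional to the physical error rate raised to the power `t + 1`. The function name and this simplified model are illustrative assumptions, not a description of any particular code.

```python
def logical_improvement(physical_reduction: float, correctable_errors: int) -> float:
    """Factor by which the logical error rate drops under a heuristic model.

    Assumption (not from the article): p_logical is proportional to
    p_physical ** (t + 1), where t is the number of correctable errors.
    """
    if physical_reduction <= 0:
        raise ValueError("physical_reduction must be positive")
    if correctable_errors < 0:
        raise ValueError("correctable_errors must be non-negative")
    return physical_reduction ** (correctable_errors + 1)


if __name__ == "__main__":
    # A 2x better physical error rate, for codes correcting 1, 2, and 3 errors.
    for t in (1, 2, 3):
        factor = logical_improvement(2, t)
        print(f"t={t}: 2x better physical qubits -> {factor:g}x better logical qubits")
```

Under this model, the benefit of better physical qubits grows with the strength of the code, which is why small physical gains can translate into large logical ones.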

Not all logical qubits are the same

The general notion of using multiple physical qubits to create one higher-quality “logical” qubit is correct and does not cause any confusion. The fact that creating more and better logical qubits is a critical milestone on the path to achieving FTQC is also not controversial.

But there are lots of ways to create a logical qubit, and this simplistic definition lacks the necessary nuance to explain that not all logical qubits are the same, implementations can be incomplete (not fully functional for running fault-tolerant algorithms), and demonstrating logical qubits is not necessarily the same as achieving FTQC, or even achieving lower effective error rates.

Much like with physical qubits, simply counting the number of logical qubits is not nearly enough to produce a clear picture of their ability or utility. To truly understand logical qubits, you need to look at quality (logical error rates and gate fidelities), cost (qubit overhead and classical resources), functionality (compatible gates), and performance (logical gate speeds). While you only need one or two for an interesting demonstration, you have to balance all of them to run valuable algorithms.

For a complete picture, we recommend understanding and comparing logical qubits through five key attributes:

With these five attributes in mind, one can imagine a logical qubit that performs much worse than its underlying physical qubits (more errors, fewer available gates, lower speed, etc.), and that is exactly what we see in many of the recent “logical qubit” demonstrations. Often, logical qubit demonstrations focus on one specific attribute and do not deliver a complete or useful logical system. These are still great demonstrations on the path to usefulness, and researchers continue to make significant progress in logical qubit development. As can be seen in IonQ’s technology roadmap (below), we are well-positioned to deliver fully functional logical qubits at a higher scale significantly sooner than others, since the underlying foundation is more suited to this application.

[Image: IonQ’s technology roadmap]

Achieving fault tolerance

One of the common misconceptions about fault tolerance is that it’s a binary condition: either you’re working with noisy physical qubits or with perfect logical qubits, and you “turn on” fault tolerance like a switch. Reality is much more nuanced; like many other parts of quantum technology, it’s best not to think in binary here either, and instead think in terms of your noise tolerance: how much noise do you have to start with, and how much do you need to get rid of to successfully run a given application?

In practice, an end user will always have a specific application in mind, and that application will require (based on its gate depth and other factors) a specific target logical error rate, not an arbitrarily low one. Based on that target, a noise “budget” can be calculated, and with an appropriately reconfigurable architecture (like IonQ’s), different encoding choices can be made to target the specific amount of fault tolerance needed. While there are certainly problems that require extremely low noise, some applications may be accessible with less stringent fault tolerance requirements, leaving more logical qubits to work with.
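A minimal sketch of such a noise budget follows. It assumes that errors on each logical operation are independent, so an application with `num_operations` operations succeeds with probability `(1 - p) ** num_operations`. The function name, the independence model, and the example numbers are illustrative assumptions.

```python
import math


def per_operation_budget(num_operations: int, target_success: float) -> float:
    """Largest per-operation logical error rate that still meets the target.

    Assumption (not from the article): errors are independent, so
    success = (1 - p) ** num_operations.
    """
    if num_operations <= 0:
        raise ValueError("num_operations must be positive")
    if not 0.0 < target_success < 1.0:
        raise ValueError("target_success must be strictly between 0 and 1")
    # Solve (1 - p) ** n = target for p; expm1 keeps precision when p is tiny.
    return -math.expm1(math.log(target_success) / num_operations)


if __name__ == "__main__":
    # Hypothetical applications of increasing size, each needing 90% success.
    for n in (10**3, 10**6, 10**9):
        print(f"{n:>13,} operations -> error budget {per_operation_budget(n, 0.9):.2e}")
```

The budget shrinks roughly in proportion to the size of the computation, so a shallow application can tolerate a much higher logical error rate than a deep one.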

A more pragmatic mental model is to think of a spectrum that starts with very noisy results, and gets better, up to a point that is, for all intents and purposes, “error-free.” Along this gradient, we can place different applications or use cases, each with its own tolerance for noise. Some NISQ algorithms are incredibly resilient to noise. Some algorithms actually benefit from the introduction of certain amounts of randomness in the calculation, for example, by using this randomness to help them avoid local minima and barren plateaus in optimization challenges. Algorithms can tolerate some noise up to a certain level, which determines where they are placed along that spectrum.

Current quantum computers are often referred to as NISQ devices, but the “NISQ Era” does not end the moment a few early logical qubits become available. Just for context, fault tolerance in enterprise-grade classical computers is on the scale of less than 1 error per million hours of compute (<100 FITs). Roughly translated, that’s something like ~$10^{-20}$ errors per instruction executed. In quantum computing, we expect we’ll need to get the logical error rate to the order of $10^{-10}$ to $10^{-15}$ for textbook large-scale fault-tolerant algorithms (that’s 1 error for every $10^{10}$ to $10^{15}$ error correction operations).
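As a rough sanity check on the classical figure, assume (purely for illustration) a processor that executes about $10^{9}$ instructions per second. A rate of 100 FITs, meaning 100 failures per $10^{9}$ device-hours, then works out to:

$$
\frac{100\ \text{failures}}{10^{9}\ \text{h}} = 10^{-7}\ \text{h}^{-1},
\qquad
10^{9}\ \tfrac{\text{instructions}}{\text{s}} \times 3600\ \tfrac{\text{s}}{\text{h}} = 3.6\times10^{12}\ \tfrac{\text{instructions}}{\text{h}},
\qquad
\frac{10^{-7}}{3.6\times10^{12}} \approx 3\times10^{-20}\ \text{errors per instruction}.
$$

A different assumed instruction rate shifts the result by an order of magnitude or so, but it stays many orders of magnitude below the logical error rates targeted for quantum algorithms.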

The expected fidelity of any near-term logical qubit sits well within the noise tolerance spectrum. Those systems that start with the highest quality of physical qubits, and leverage the best architectural choices, will be able to make “cheaper” and smarter trades, push the performance of logical qubits further for less, and enable larger and more demanding applications on nearer-term devices.

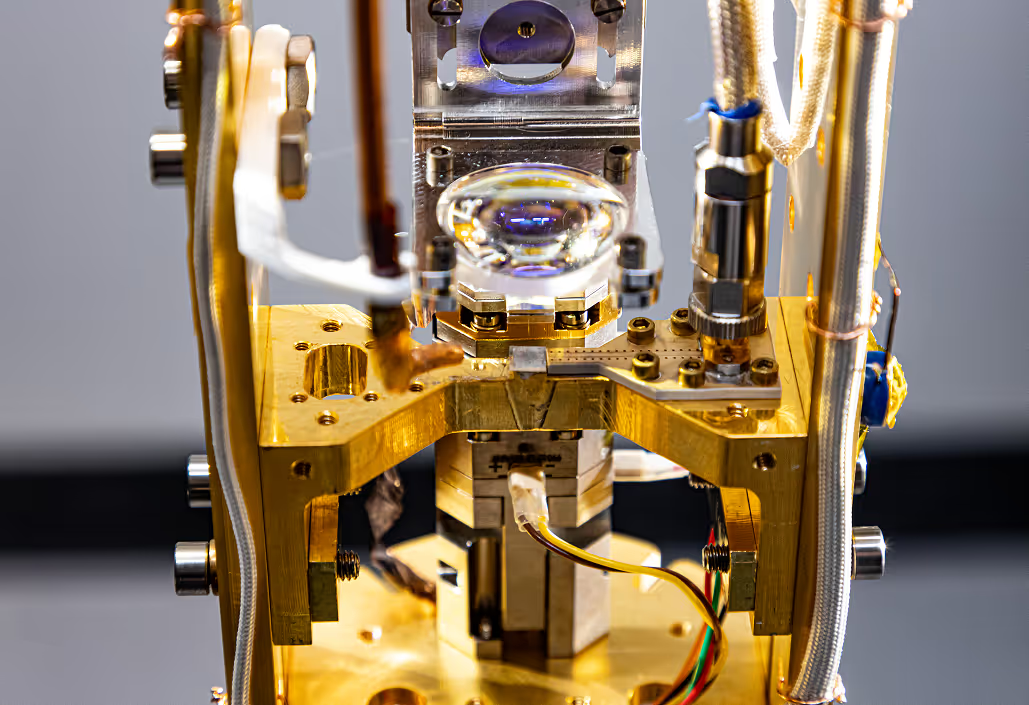

IonQ's natural advantages

At IonQ, we chose to invest deeply in harnessing the highest quality physical qubits available — atomic ions, “nature’s qubit” — and the best possible mechanism for isolating them from as much noise as possible: suspended in free space using precision electromagnetic fields in an extreme vacuum chamber. Furthermore, of all of the options for “nature’s qubits,” we decided to choose Barium, an atomic species that experiences less stray photon scattering, suffers lower heating near trap surfaces, has simpler state preparation and measurement pathways, and has one of the highest possible fidelity potentials of any possible ion qubit.

With IonQ’s acquisition of Oxford Ionics, we now have access to technology capable of 99.99% physical two-qubit gate fidelity, before having to rely on various error mitigation and correction techniques to further reduce our systems’ logical error rate. As of October 2025, this fidelity target is higher than any logical qubit demonstration, without any of the limitations and complications associated with encoding logical qubits, compiling algorithms for logical qubits, performing many rounds of error correction, and so on. This means that even before fault tolerance, an IonQ system with only 100 physical qubits delivering 99.99% fidelity will likely greatly outperform a quantum system with 10,000 lower-quality physical qubits forming 100 logical ones — less overhead, full universal gate operations, faster speed, lower energy consumption, etc. Implementing logical qubits in IonQ’s architecture further compounds these advantages, providing more-performant logical qubits for many fewer resources.

IonQ's approach to logical qubits

IonQ’s approach to building logical qubits in a scalable and effective manner is based on a few key technical choices:

- Using the best possible physical qubits.

- Forming 2D arrays of ions with all-to-all connectivity between them.

- Building modules around each collection of ions.

- Interconnecting modules for any necessary size of computation.

The heart of this approach is a quantum error correction protocol tailored to the hardware. IonQ scientists recently introduced new error correction codes called BB5 codes, which are a variant of the bivariate bicycle (BB) codes. Using the BB5 codes, they showed that physical qubits capable of 99.9% fidelity can achieve an initial idle logical error rate of $5\cdot10^{-5}$ (99.995% fidelity), four times lower than the best ‘vanilla’ BB code, with up to 50 physical qubits. If we assume the full fidelity potential of Barium, using BB5 would add an extra 9 and give us an idle logical fidelity of 99.9995% (a logical error rate of $5\cdot10^{-6}$). These might seem like tiny differences, but across many, many operations on many entangled qubits, these error rates compound rapidly, and even minor differences like this can mean the difference between a successful error-correcting code and errors spinning out of control.
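To see how quickly such differences compound, here is a small sketch. It assumes each step fails independently at a fixed rate, and it uses 10,000 steps as an arbitrary illustrative workload. The ‘vanilla’ BB rate of $2\cdot10^{-4}$ is inferred from the four-times comparison above, not a separately quoted figure.

```python
def success_probability(error_rate: float, num_steps: int) -> float:
    """Probability of no error across num_steps steps.

    Assumption (not from the article): steps fail independently at a
    fixed per-step error_rate.
    """
    if not 0.0 <= error_rate <= 1.0:
        raise ValueError("error_rate must be between 0 and 1")
    if num_steps < 0:
        raise ValueError("num_steps must be non-negative")
    return (1.0 - error_rate) ** num_steps


# Per-step idle logical error rates; the first entry is inferred (4x the BB5 rate).
RATES = {
    "vanilla BB (inferred)": 2e-4,
    "BB5, 99.9% physical fidelity": 5e-5,
    "BB5, full Barium potential": 5e-6,
}

if __name__ == "__main__":
    steps = 10_000  # hypothetical workload size
    for label, rate in RATES.items():
        print(f"{label:<30} {success_probability(rate, steps):.1%}")
```

Under these assumptions, the three cases succeed roughly 14%, 61%, and 95% of the time, so a single extra 9 changes the outcome from unreliable to mostly dependable.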

In addition to building new and more efficient quantum error correction codes, IonQ scientists also recently proposed a distributed architecture for a fault-tolerant quantum memory based on BB codes, capable of achieving the very low logical error rate required for large-scale FTQC applications. This design can be used for both long and short chains of trapped ions, and is well-suited to the Oxford Ionics technology, illustrating the flexibility of trapped ions.

Ultimately, like most things in quantum computing, good architecture has a huge impact on the quality of the results. Building a useful quantum computer requires a balance between multiple competing priorities. IonQ is leading the way in demonstrating these balances for several generations of quantum computers. While some companies focus on achieving the fastest gate speeds or only maximizing fidelity at all costs, IonQ remains highly focused on the optimal tradespace for building useful applications. This means balancing fidelity, connectivity, shuttling, and gate operations to provide the most utility and commercial viability.

To learn more about IonQ’s architecture and how movable qubits enable efficient and modular layouts for error correction, see another recent blog post: Reimagining Error Correction to Advance Modular Quantum Computing.