We recently announced our collaboration with Hyundai to use IonQ quantum computers for image recognition in autonomous vehicles. We would like to share some details on how this exciting research is being applied to transform the mobility industry.

This project builds on an existing relationship with Hyundai to help them solve challenges along the path to a new generation of electric, self-driving vehicles. Since January of 2022, we have been partnering with them to model molecules useful for battery research using our quantum computers.

Our new collaboration isn’t only relevant to “self-driving” cars. Short of full automation, drivers can benefit from other advanced driver assistance systems that enhance safety and convenience. Cruise control was an early, simple technology of that type. Then came lane-keeping assist, automatic emergency braking, and blind spot warnings. Even if you never intend to purchase a self-driving car, technology that improves recognition of road signs and pedestrians can still make your life easier and reduce your risk of being in an auto accident.

Hyundai is envisioning ways that fully self-driving cars can be redesigned to become living space in addition to transportation. They are exploring new seat configurations that turn cars into living rooms or work spaces, along with novel air bags that will make those configurations safe. By developing reliable software that recognizes changing road conditions, they hope to turn commutes into leisure time.

Our research with Hyundai into this technology is broken into two primary areas.

The first is image recognition of road signs. We started with a library of 43 different road signs and tested quantum machine learning models on our quantum simulators. So far we have run models covering up to eight of those sign types on IonQ Aria, using as many as 16 qubits. Of course, 16 qubits is not enough for commercial use. The real point is that we now have enough data, across enough classes of signs and enough qubits, to extrapolate at what point in quantum computing’s advancement this method of image recognition will become commercially relevant.

The second primary research area is object detection. Given an image of a street scene from the perspective of a car, the goal is to identify potential obstacles and risks within it.

The quantum computer must tackle two different tasks: bounding box prediction and object classification. The first task involves figuring out which parts of the image represent the background, such as buildings, the sky and trees outside the road area. This allows the quantum computer to distinguish objects of interest from the background. It then draws a box around each of those objects to predict its location precisely. These boxes can overlap and may be of any size. The second task involves determining which type of object appears in each box, such as a cyclist, another car, a dog or a pedestrian. Both tasks will be accomplished by training a model and comparing its predictions with KITTI, a standard data set of labeled road images created by the Karlsruhe Institute of Technology and the Toyota Technological Institute at Chicago to provide real-world computer vision benchmarks. Together, these two tasks form the object detection algorithm.
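To make the two tasks concrete, here is a minimal, purely classical sketch of how a single predicted box might be checked against a labeled box from a data set like KITTI. It assumes axis-aligned boxes given as `(x_min, y_min, x_max, y_max)` pixel coordinates and uses intersection over union (IoU), a common overlap measure for bounding boxes. The `Box` type, the function names and the 0.5 threshold are illustrative assumptions, not details of the IonQ and Hyundai model; real benchmarks often use class-dependent thresholds.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned bounding box in pixel coordinates (hypothetical format)."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float
    label: str  # object class, e.g. "pedestrian" or "cyclist"

    def area(self) -> float:
        # Degenerate or inverted boxes get zero area.
        return max(0.0, self.x_max - self.x_min) * max(0.0, self.y_max - self.y_min)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two boxes, a value in [0, 1]."""
    # Width and height of the overlapping region (zero if the boxes don't touch).
    ix = max(0.0, min(a.x_max, b.x_max) - max(a.x_min, b.x_min))
    iy = max(0.0, min(a.y_max, b.y_max) - max(a.y_min, b.y_min))
    inter = ix * iy
    union = a.area() + b.area() - inter
    return inter / union if union > 0 else 0.0


def is_correct_detection(pred: Box, truth: Box, threshold: float = 0.5) -> bool:
    """Counts a prediction as correct only if both tasks succeed:
    the box overlaps the labeled box enough (bounding box prediction)
    and the predicted class matches the label (object classification)."""
    return pred.label == truth.label and iou(pred, truth) >= threshold


# Usage: a prediction shifted 10 pixels right and down from the labeled box.
truth = Box(100, 50, 200, 150, "pedestrian")
pred = Box(110, 60, 210, 160, "pedestrian")
print(round(iou(pred, truth), 3))  # → 0.681
print(is_correct_detection(pred, truth))  # → True
```

Requiring both conditions mirrors the split described above: a well-placed box with the wrong class, or the right class with a badly placed box, would each count as a miss.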

Our work on this begins with running these operations on a quantum simulator and then moves to IonQ Aria, currently the world’s most powerful quantum computer.

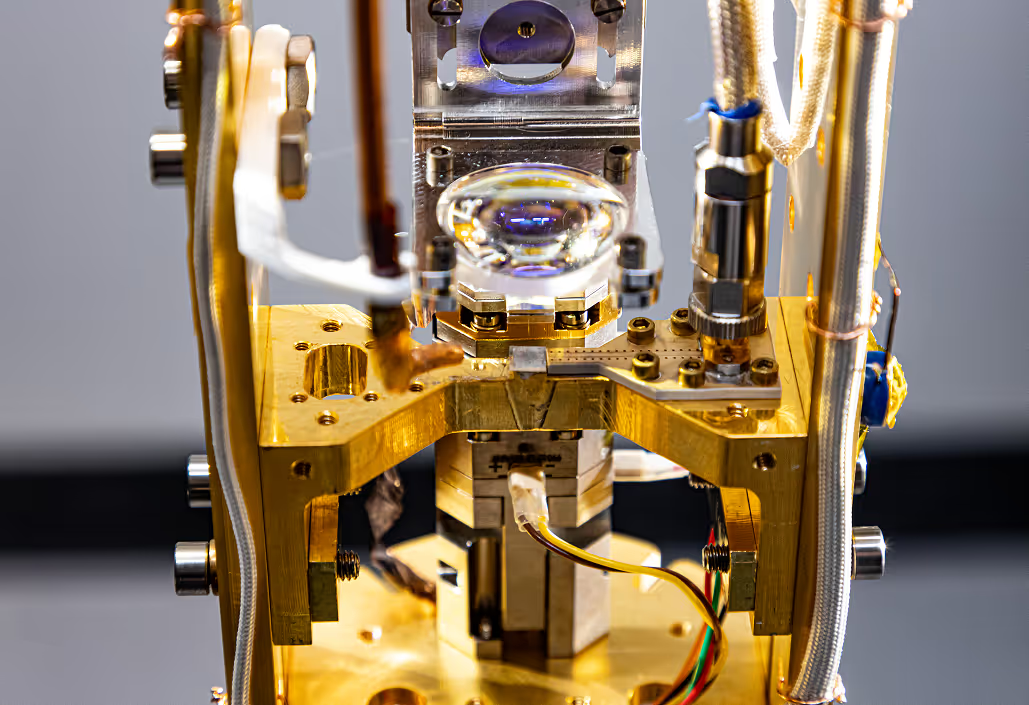

Right now it would be impossible to operate a quantum computer in a moving vehicle. They are relatively large and wouldn’t handle the jolt of a pothole very well. But our systems are getting more powerful and stable every few years, and our technology roadmap calls for shrinking them.

Software demonstrators have often run years ahead of what can be deployed on commercially available hardware. As a result, new hardware becomes useful as quickly as possible, because programmers have already worked out how to break a problem into the pieces that lead to a solution.

For example, one of the first text editors was O26, developed in 1967 for the CDC 6000 series of supercomputers, which were about the size of four large refrigerators. The flagship 6600 model of the series boasted a 10 MHz processor and 982K of memory, and only about a hundred were made. O26 didn’t exactly invite anyone to write the great American novel on it. Or even a memo. But it helped pave the way for word processors. Today it would be difficult to name more than a handful of books or memos written in the last 25 years that did not begin with a blinking cursor in the upper left-hand corner of a program like Word or WordPerfect.

Figuring out how to do something on a large, early-generation computer has value, even if you can’t put it in a consumer’s living room, pocket or car yet. The companies that are doing this today, like Hyundai, hope to lead their sectors a few years from now as quantum computers are scaled up in power and scaled down in size.

To find out more about how you can position your business to benefit from the rapid growth of quantum computing, contact our sales team.